
Online Learning System Using Job Definitions

Many problems require constant refreshing of the training dataset, followed by a model update and, finally, some kind of model scoring or prediction. Systems of this type usually require sophisticated and expensive software built specifically for the problem at hand.

This case study shows how to build a very good approximation of such custom-built systems using GeneXproServer and a few simple scripts.

This case study demonstrates one way of solving this problem using GeneXproServer job definitions. The solution requires some programming to download the data from an external source (Quandl.com, in this case) and transform it, as well as some file management and processing of the results. Some of these steps may be optional or not applicable to other problems, but it should be easy to remove steps or add new ones.

The Problem

The solution we will build performs the following steps during normal processing:

- Download the latest IBM daily stock price data from Quandl.com.
- Extract the Close column.
- Smooth the extracted data by applying a moving average.
- Load the resulting moving average into a GeneXproTools run.
- Process the data for a number of generations to create the model.
- Predict the Close value of the IBM stock for the following day.
- Repeat every day.

To set up this system we will need:

- A computer with the following software:
  - GeneXproTools 5.0
  - GeneXproServer 5.0
- A Python environment with NumPy and pandas installed (such as the Anaconda distribution), plus Quandl’s Python API tools.
- An initial extract of the latest IBM stock data.
- A GeneXproTools Time Series Prediction model optimized for the IBM stock data.

Installation and Setup

If you installed the Anaconda distribution, you already have pip (a Python package manager) installed and ready to use for installing Quandl’s Python API. If you don’t, please consult Quandl’s documentation for other options.

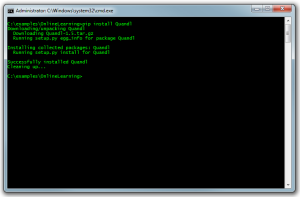

Open a command line and type the following:

```
pip install Quandl
```

The following image should be similar to what you see on your screen:
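Before writing the download script, it can help to confirm that the required packages are importable from the Python environment that preprocessing.bat will invoke. The helper below is a sketch and is not one of the article's files. It only checks whether each module can be found. It tries both `quandl` (the module name in current releases) and `Quandl` (the capitalized name used by older releases of the package).

```python
import importlib.util

# Module names to look for. "quandl" (current) and "Quandl" (older releases)
# are both checked because the import name changed between package versions.
REQUIRED = {
    "numpy": ["numpy"],
    "pandas": ["pandas"],
    "quandl": ["quandl", "Quandl"],
}


def check_dependencies(required=REQUIRED):
    """Map each dependency to True if any of its candidate module names can be found."""
    status = {}
    for name, candidates in required.items():
        status[name] = any(importlib.util.find_spec(mod) is not None for mod in candidates)
    return status


def missing_dependencies(required=REQUIRED):
    """Return the sorted names of dependencies that could not be found."""
    return sorted(name for name, ok in check_dependencies(required).items() if not ok)
```

Once everything is installed, running `print(missing_dependencies())` from the same command line should print an empty list.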

Downloading and Transforming Data

Next, we need to create the Python script that downloads IBM’s stock data from Quandl.com and processes it as explained above.

You can also download the Python file to see the code.

The script starts by downloading the latest IBM stock data. It then computes a moving average of the Close with a window of 10 and saves it to a text file named latest_moving_average.csv.
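The actual get_ibm_data.py ships with the article's files and is not reproduced here. As a hedged sketch of what such a script might look like, the code below keeps the smoothing and saving logic separate from the download, so the transformation can be tested offline. Several details are assumptions:

- The Quandl dataset code `EXAMPLE/IBM` is a hypothetical placeholder.
- The column header `CloseMA` is also a placeholder.
- The download assumes the current `quandl` package, whose `quandl.get` returns a pandas DataFrame.
- The moving average is assumed to be a trailing simple one.

```python
import pandas as pd

WINDOW = 10  # moving-average window used in the article
OUTPUT_FILE = "latest_moving_average.csv"
DATASET_CODE = "EXAMPLE/IBM"  # hypothetical placeholder: substitute the real Quandl code


def download_prices(dataset_code=DATASET_CODE):
    """Download daily prices as a DataFrame (needs the quandl package and network access)."""
    import quandl  # imported lazily so the rest of the module works offline
    return quandl.get(dataset_code)


def moving_average_of_close(prices, window=WINDOW):
    """Extract the Close column and smooth it with a trailing simple moving average."""
    close = prices["Close"].astype(float)
    # rolling().mean() yields NaN for the first window-1 rows, so drop them
    return close.rolling(window=window).mean().dropna()


def save_single_column(series, path=OUTPUT_FILE, header="CloseMA"):
    """Write a single column with a header in the first line and no index."""
    series.to_frame(name=header).to_csv(path, index=False)


def main():
    prices = download_prices()
    ma = moving_average_of_close(prices)
    save_single_column(ma)
    print(f"Saved {len(ma)} moving-average values to {OUTPUT_FILE}")
```

To reproduce the three steps from the command line, call `main()` from an `if __name__ == "__main__":` guard at the bottom of the file.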

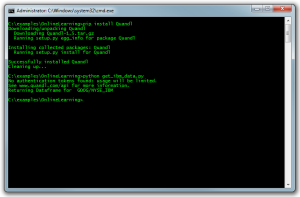

To run the script, go back to the command line and run the following command:

```
python get_ibm_data.py
```

You should get the following feedback:

And the folder contents should be:

If you open the latest_moving_average.csv file, you will find a single column of data containing the moving average of the IBM stock's Close.
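You may want the pipeline to fail early when the download or transformation goes wrong. In that case, a check like the following sketch could run before GeneXproServer loads the file. It is not part of the original scripts. It simply verifies the layout described in this article: a header line followed by one numeric value per line.

```python
import csv


def read_single_column(path):
    """Return (header, values) for a one-column file with a header line.

    Raises ValueError if a row has more than one field or a non-numeric value.
    """
    with open(path, newline="") as f:
        rows = [row for row in csv.reader(f) if row]  # skip blank lines
    if not rows:
        raise ValueError(f"{path} is empty")
    header, data = rows[0], rows[1:]
    if len(header) != 1:
        raise ValueError(f"expected one header field, found {len(header)}")
    values = []
    for line_no, row in enumerate(data, start=2):
        if len(row) != 1:
            raise ValueError(f"line {line_no}: expected one field, found {len(row)}")
        values.append(float(row[0]))  # float() raises ValueError on non-numeric data
    return header[0], values
```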

Creating the Template GeneXproTools File

Open GeneXproTools and create a new Time Series Prediction run using the latest_moving_average.csv file created by the Python script. For this specific dataset the default settings are quite good. A different problem may require adjusting the settings, especially the embedding dimension or delay time, or the window of the moving average. If you prefer, you can download the sample gep file.
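The two settings mentioned above come from time-delay embedding. In time series prediction, a single column of values is usually turned into a table of lagged inputs:

- The embedding dimension is the number of past values used as inputs.
- The delay time is the spacing between those values.

The sketch below shows this generic embedding. It illustrates the concept only and is not guaranteed to match the exact layout GeneXproTools builds internally.

```python
def embed(series, dimension, delay):
    """Build (inputs, target) pairs from a 1-D series using time-delay embedding.

    Each input vector holds `dimension` values spaced `delay` steps apart,
    and the target is the value one step after the most recent input.
    """
    if dimension < 1 or delay < 1:
        raise ValueError("dimension and delay must be positive")
    span = (dimension - 1) * delay  # distance from the oldest to the newest input
    pairs = []
    for t in range(span, len(series) - 1):
        inputs = [series[t - span + k * delay] for k in range(dimension)]
        pairs.append((inputs, series[t + 1]))
    return pairs
```

Larger dimensions or delays consume more history per sample, which leaves fewer training rows from the same file. That trade-off is one reason these settings may need tuning on a different dataset.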

The Job Definition File

We will use the job definition to load the new data, refresh the existing model and create the next prediction. At the end we also need some clean-up so the process can be repeated. We also need to calculate the raw prediction for the Close from the model's output, because the model predicts the moving average rather than the Close itself. These final steps will also be done in Python.
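The raw Close can be recovered from a predicted moving average as follows. This assumes the trailing simple moving average of the pre-processing step, with window $w = 10$ and closing prices $x_t$. The model forecasts $\hat{m}_{t+1}$, and the $w-1$ most recent closes are already known:

$$
\hat{m}_{t+1} = \frac{1}{w}\left(\hat{x}_{t+1} + \sum_{i=t-w+2}^{t} x_i\right)
\quad\Longrightarrow\quad
\hat{x}_{t+1} = w\,\hat{m}_{t+1} - \sum_{i=t-w+2}^{t} x_i
$$

For example, suppose $w = 10$, the nine most recent closes sum to 1,700 and the predicted moving average is 190.5. The implied Close is then $10 \times 190.5 - 1700 = 205$. Any error in $\hat{m}_{t+1}$ is multiplied by $w$ in $\hat{x}_{t+1}$, so the raw prediction is considerably noisier than the smoothed one.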

We start by defining the job node:

```xml
<job filename="IBMStockClose.gep"
     path="C:\examples\OnlineLearning"
     feedback="2"
     usesubfolder="2"
     subfoldername="tmp">
</job>
```

The job node specifies the GeneXproTools file, followed by the current folder and the feedback interval, which is set to two seconds. By setting usesubfolder to 2 (Fixed), we make C:\examples\OnlineLearning\tmp the folder where all intermediate files are saved. Every time this job runs, GeneXproServer deletes and recreates the tmp folder.

Then we add a pre-processing directive that runs the get_ibm_data.py script. This script downloads the latest data from Quandl.com, converts it to a moving average and saves it in a format that GeneXproServer can load (a single column with a header in the first line). The directive calls the preprocessing.bat batch file, which in turn calls the Python script. This indirection makes it easy to add other scripts in the future when required.

```xml
<preprocessing path="C:\examples\OnlineLearning\preprocessing.bat"
               hide="yes"
               synchro="yes"/>
```

Next we start the run node. We set the run to process for 200 generations using the selected model as the seed. This means that every time the job runs, the model is refreshed using the latest data.

```xml
<run id="1" type="continue" stopcondition="generations" value="200">
```

Before the run can be processed, we need to load the latest data downloaded in the pre-processing step. To do this, we add a datasets directive that loads the data into the run:

```xml
<datasets>
  <dataset type="training" records="all">
    <connection type="file" format="timeseries">
      <path separator="tab" haslabels="yes">
        C:\examples\OnlineLearning\latest_moving_average.csv
      </path>
    </connection>
  </dataset>
</datasets>
```

Also inside the run node, we add the predict directive, which saves the forecast value to the file prediction.txt in the tmp folder:

```xml
<predict quantity="1" format="text" filename="prediction.txt" />
```

Finally, we run the post-processing script, which extracts the predicted moving average and calculates the corresponding raw value. As in the pre-processing case, this is done indirectly by calling a Python script from a batch file:

```xml
<postprocessing path="C:\examples\OnlineLearning\postprocessing.bat"
                hide="yes"
                synchro="yes"/>
```

This Python script is more complex than the previous one because it does several different things:

- It calculates the raw value from the predicted moving average.
- It updates the history.csv file with this value. This file stores the actual values and predictions for later analysis.
- It calculates the absolute and relative errors of the previous day's prediction and prints them to the screen.
- Finally, it replaces the IBMStockClose.gep file with the updated file containing the latest data and model, leaving everything ready for the next cycle.
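The post-processing script itself ships with the article's files. The code below is only a sketch of the same bookkeeping, under these assumptions:

- The moving average is a trailing simple one with a window of 10. The raw Close is therefore 10 times the predicted average minus the sum of the last 9 closes.
- history.csv rows have the form date, prediction, actual, as in the sample shown later in this article.
- The next trading day simply skips weekends; market holidays are ignored.
- Relative error is taken with respect to the actual value.

The function names are hypothetical. Two steps are left out because their details are not described here: reading prediction.txt (its layout is not given) and replacing IBMStockClose.gep (the name of the updated file written to tmp is not given).

```python
import csv
from datetime import datetime, timedelta

WINDOW = 10
DATE_FORMAT = "%Y-%m-%d %H:%M:%S"  # matches dates such as 2013-08-12 00:00:00


def raw_from_moving_average(predicted_ma, recent_closes, window=WINDOW):
    """Invert a trailing simple moving average to get the implied next Close."""
    last = list(recent_closes)[-(window - 1):]
    if len(last) != window - 1:
        raise ValueError(f"need the last {window - 1} closes, got {len(last)}")
    return window * predicted_ma - sum(last)


def next_weekday(day):
    """Return the next Monday-to-Friday date (market holidays are ignored)."""
    nxt = day + timedelta(days=1)
    while nxt.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        nxt += timedelta(days=1)
    return nxt


def update_history(rows, actual_close, new_prediction):
    """Fill in the last row's actual value, append the new forecast, and return errors.

    `rows` is a list of [date, prediction, actual] lists whose last row has an
    empty actual field. Returns (new_rows, absolute_error, relative_error).
    """
    if not rows:
        raise ValueError("history is empty")
    last = rows[-1]
    if len(last) >= 3 and last[2].strip():
        raise ValueError("last history row must have an empty actual value")
    date_str, predicted = last[0], float(last[1])
    abs_err = abs(actual_close - predicted)
    rel_err = abs_err / abs(actual_close)
    next_date = next_weekday(datetime.strptime(date_str, DATE_FORMAT))
    new_rows = [list(r) for r in rows[:-1]]  # copy so the input is not mutated
    new_rows.append([date_str, f"{predicted:.5f}", f"{actual_close:.2f}"])
    new_rows.append([next_date.strftime(DATE_FORMAT), f"{new_prediction:.5f}", ""])
    return new_rows, abs_err, rel_err


def process_history_file(path, actual_close, new_prediction):
    """Read history.csv, rewrite it with the update, and print the previous day's errors."""
    with open(path, newline="") as f:
        rows = [row for row in csv.reader(f) if row]
    new_rows, abs_err, rel_err = update_history(rows, actual_close, new_prediction)
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(new_rows)
    print(f"Absolute error: {abs_err:.4f}  Relative error: {rel_err:.4%}")
```

In a real cycle, `actual_close` would come from the freshly downloaded data. `new_prediction` would be the result of `raw_from_moving_average` applied to the value read from prediction.txt.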

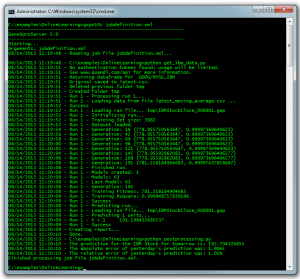

To test the job definition, open a command line, navigate to the folder C:\examples\OnlineLearning and type:

```
gxps50c jobdefinition.xml
```

Then press Enter. You should get results similar to this image:

Finally, all that is left is to automate the process to run once a day, except on weekends. We suggest using the Windows Task Scheduler for this, as described in this web page.
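Task Scheduler can be configured to trigger on weekdays only. If you prefer to keep that rule in code, for example behind a single daily trigger, a small wrapper like the following sketch could call GeneXproServer only from Monday to Friday. It assumes that gxps50c is on the PATH and that jobdefinition.xml lives in C:\examples\OnlineLearning. `run_daily_job` is a hypothetical helper, and market holidays are not handled.

```python
import subprocess
from datetime import date
from pathlib import Path

JOB_FOLDER = Path(r"C:\examples\OnlineLearning")


def is_trading_weekday(day):
    """True for Monday to Friday (holidays are not considered)."""
    return day.weekday() < 5


def run_daily_job(today=None, folder=JOB_FOLDER, command="gxps50c"):
    """Run the job definition unless today falls on a weekend.

    Returns the process exit code, or None when the run was skipped.
    """
    today = today or date.today()
    if not is_trading_weekday(today):
        print(f"{today}: weekend, skipping")
        return None
    result = subprocess.run([command, "jobdefinition.xml"], cwd=folder)
    return result.returncode
```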

Installing the Service to a Different Folder and Initializing It

To install this system in a different location, you will need to update all the paths in the jobdefinition.xml file to the new path. If you are moving it to a different computer, don't forget to install the necessary dependencies described at the beginning of this article.
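Updating the paths by hand is error-prone because they appear in several places: the job node, both batch-file directives and the dataset path. A hedged sketch of automating this is to replace every occurrence of the old base folder with the new one. `relocate_job_definition` is a hypothetical helper that also assumes the file is UTF-8 or plain ASCII. If the batch files or Python scripts contain their own hard-coded paths, this does not touch them.

```python
import re
from pathlib import Path

OLD_BASE = r"C:\examples\OnlineLearning"


def relocate_text(text, old_base, new_base):
    """Replace every occurrence of old_base with new_base.

    Matching ignores case because Windows paths are case-insensitive; a lambda
    is used as the replacement so backslashes in new_base are kept literally.
    """
    pattern = re.compile(re.escape(old_base), re.IGNORECASE)
    return pattern.sub(lambda _match: new_base, text)


def relocate_job_definition(job_file, new_base, old_base=OLD_BASE):
    """Rewrite a job definition file in place and return how many paths changed."""
    path = Path(job_file)
    text = path.read_text(encoding="utf-8")
    count = len(re.findall(re.escape(old_base), text, flags=re.IGNORECASE))
    path.write_text(relocate_text(text, old_base, new_base), encoding="utf-8")
    return count
```

For example, `relocate_job_definition("jobdefinition.xml", r"D:\gxp\ibm")` would point every path in the job definition at D:\gxp\ibm.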

The only initialization required is to update the last line of the history.csv file. The history.csv file shipped with this article contains something similar to:

```
2013-08-08 00:00:00,199.92211,199.89
2013-08-09 00:00:00,188.43857,187.82
2013-08-12 00:00:00,183.15527,
```

Note that the last line is missing the Actual value. This is intentional, because the post-processing script expects it. You can change the dates, or you can leave them unchanged and ignore the first records when analyzing the performance of your predictions over time.
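Because the post-processing step relies on that empty last field, a quick check before the first scheduled run could save a failed cycle. The sketch below uses a hypothetical helper, not one of the shipped scripts. It parses lines in the date, prediction, actual layout shown above and reports whether the file is ready.

```python
import csv
from datetime import datetime

DATE_FORMAT = "%Y-%m-%d %H:%M:%S"


def history_is_ready(lines):
    """Return True if the last non-empty row has a valid date, a numeric
    prediction and an empty actual value."""
    rows = [row for row in csv.reader(lines) if row]
    if not rows:
        return False
    last = rows[-1] + [""] * (3 - len(rows[-1]))  # tolerate a missing trailing comma
    try:
        datetime.strptime(last[0], DATE_FORMAT)
        float(last[1])
    except ValueError:
        return False
    return last[2].strip() == ""
```

With a real file, pass the open file object directly, for example `with open("history.csv", newline="") as f: print(history_is_ready(f))`. This works because `csv.reader` accepts any iterable of lines.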

File Download

All the files used above can be downloaded from here. The download is a zip file that should be extracted to the folder C:\examples\OnlineLearning.

