Some of the most important new features that we introduced in GeneXproTools 5.0 include different methods for dataset partitioning and subsampling. Now, with Mini-Release 2 "New Project: Cross-Validation, Var Importance & More", we are building on these methods to implement what I call Bootstrap Cross-Validation.

The Bootstrap Cross-Validation technique consists of evaluating a particular measure of fit, for example, the classification accuracy or the R-square of a model, across k different random samples of a specific dataset (training or validation/test dataset) and then averaging the results for the k folds. For each dataset, the random sampling is done with replacement using the number of records chosen by the user in the Settings Panel for the training and validation/test datasets.
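The procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch, not GeneXproTools code: the names `bootstrap_cv` and `accuracy`, the toy dataset and the toy model are all assumptions made up for the example. It draws k random samples with replacement, evaluates the measure of fit on each one, and averages the k results.

```python
import random
from statistics import mean

def accuracy(model, records):
    """Fraction of (input, label) records the model classifies correctly."""
    return sum(model(x) == y for x, y in records) / len(records)

def bootstrap_cv(model, dataset, measure, k, sample_size, seed=None):
    """Average a measure of fit over k random samples drawn with replacement.

    Hypothetical helper: returns the average and the per-fold scores.
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(k):
        # random.choices samples WITH replacement, as the technique requires
        sample = rng.choices(dataset, k=sample_size)
        scores.append(measure(model, sample))
    return mean(scores), scores

# Toy data (assumption): the true class is 1 when x >= 50
dataset = [(x, int(x >= 50)) for x in range(100)]

# Toy model (assumption): its threshold is slightly off, so it
# misclassifies x = 50..59 and scores 0.9 on the full dataset
def model(x):
    return int(x >= 60)

average, folds = bootstrap_cv(model, dataset, accuracy,
                              k=30, sample_size=40, seed=1)
print(f"accuracy averaged over {len(folds)} folds: {average:.3f}")
```

Any other measure of fit with the same `(model, records)` signature could be passed in place of `accuracy`.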

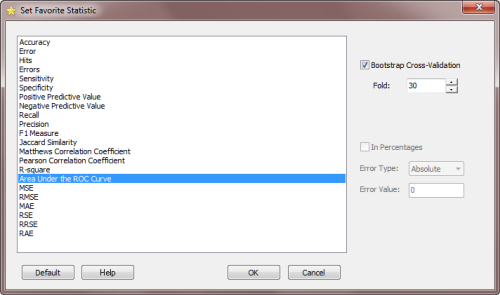

The Bootstrap Cross-Validation technique is implemented through the Favorite Statistics Window, allowing you to apply this powerful technique to a wide range of measures of fit. It is available for Regression, Classification and Logistic Regression.

Furthermore, we've also extended the Bootstrap Cross-Validation technique to the fitness evaluation, which means that you also have access to cross-validation results for a wide range of fitness function measures, including custom fitness functions.

In short, the Bootstrap Cross-Validation technique is a powerful tool for model selection, as it allows you to cross-validate model performance across a wide range of performance metrics, including all the Favorite Statistics and Fitness Functions available for each modeling category, as well as User Defined Statistics through Custom Fitness Functions.

So, whether you have a big dataset or a small one, you can make the most of it to help you select the very best model using cross-validation. For example, if you have a big dataset, say, 20k records, and want to both speed up testing and obtain a more accurate measure of the generalization error, you can instead use just 2k records in a 30-fold cross-validation. If, on the other hand, you have a small dataset and are afraid that your validation/test dataset is not representative of the sample population, using Bootstrap Cross-Validation on the validation/test dataset or on the entire dataset can increase the odds of selecting the very best model.
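As a rough illustration of the large-dataset scenario, the sketch below compares two candidate models on 30 bootstrap samples of 2k records drawn from a 20k-record dataset and picks the one with the higher average R-square. Everything here is an assumption made for the example: the synthetic data, the two candidate models, the helper names `r_square` and `compare_models`, and the definition of R-square as 1 - SS_res/SS_tot, which may differ from the one GeneXproTools uses.

```python
import random
from statistics import mean

def r_square(model, records):
    """R-square computed as 1 - SS_res / SS_tot (an assumed definition)."""
    ys = [y for _, y in records]
    y_bar = mean(ys)
    ss_res = sum((y - model(x)) ** 2 for x, y in records)
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot

def compare_models(models, dataset, k, sample_size, seed=None):
    """Score every model on the same k bootstrap samples; return the averages."""
    rng = random.Random(seed)
    scores = {name: [] for name in models}
    for _ in range(k):
        # One sample per fold, drawn with replacement and shared by all models
        sample = rng.choices(dataset, k=sample_size)
        for name, model in models.items():
            scores[name].append(r_square(model, sample))
    return {name: mean(values) for name, values in scores.items()}

# Synthetic 20k-record dataset (assumption): y = 2x + Gaussian noise
data_rng = random.Random(0)
dataset = []
for _ in range(20_000):
    x = data_rng.uniform(0, 10)
    dataset.append((x, 2.0 * x + data_rng.gauss(0, 1)))

# Two hypothetical candidate models to choose between
models = {
    "model_a": lambda x: 2.0 * x,
    "model_b": lambda x: 1.5 * x,
}

averages = compare_models(models, dataset, k=30, sample_size=2_000, seed=42)
best = max(averages, key=averages.get)
print(averages)
print("selected:", best)
```

Scoring all candidates on the same samples keeps the comparison fair, since differences between the averages then reflect the models rather than the luck of the draw.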

The classical cross-validation technique was developed to deal with model overfitting that plagues different algorithms, from Decision Trees to Linear Regression. Model overfitting is not much of an issue in Gene Expression Programming, but still I hope you’ll find this adaptation of cross-validation to the evolutionary context of model building and selection in GeneXproTools a valuable and powerful tool.
